Batch Effect Correction in Multi-Site Embryo Studies: Strategies for Robust Data Integration and Biological Discovery


Abstract

Integrating single-cell and spatial transcriptomic data from multiple sites and studies is essential for building comprehensive models of embryonic development but is severely challenged by technical batch effects. This article provides a foundational to advanced guide for researchers and drug development professionals, exploring the profound impact of batch effects on biological interpretation and reproducibility. It details current methodological solutions, from established algorithms to novel order-preserving and deep learning approaches, and provides a practical framework for troubleshooting design flaws and optimizing correction performance. Finally, it outlines rigorous validation and comparative analysis strategies to ensure corrected data is reliable for downstream applications such as cell lineage prediction and embryo model authentication, ultimately aiming to enhance the fidelity of cross-study biological insights in embryology.

Understanding Batch Effects: Why Technical Noise Threatens Embryonic Development Research

Troubleshooting Guide: Frequently Asked Questions

FAQ 1: What are the most common sources of batch effects in multi-site studies? Batch effects are technical variations unrelated to the study's biological objectives and can be introduced at virtually every step of a high-throughput experiment [1]. The table below summarizes frequent sources encountered during different phases of a typical study [1]:

| Stage | Source | Impact Description |
|---|---|---|
| Study Design | Flawed or Confounded Design | Selecting samples based on specific characteristics (age, gender) without randomization; minor treatment effect sizes are harder to distinguish from batch effects [1]. |
| Sample Preparation & Storage | Protocol Procedure | Variations in centrifugal force, time, or temperature before centrifugation can alter mRNA, protein, and metabolite measurements [1]. |
| Sample Preparation & Storage | Sample Storage Conditions | Variations in storage temperature, duration, or number of freeze-thaw cycles [1]. |
| Data Generation | Different Labs, Machines, or Pipelines | Systematic differences from using different equipment, laboratories, or data analysis workflows [1] [2]. |
| Longitudinal Studies | Confounded Time Variables | Technical variables like sample processing time can be confounded with the exposure time of interest, making it impossible to distinguish true biological changes from artifacts [1]. |

FAQ 2: What is the real-world impact of uncorrected batch effects? The impact ranges from reduced statistical power to severe, real-world consequences, including irreproducible findings and incorrect clinical conclusions [1] [2].

- Incorrect Conclusions and Misguided Treatments: In one clinical trial, a change in the RNA-extraction solution caused a shift in gene-based risk calculations. This resulted in 162 patients being misclassified, 28 of whom subsequently received incorrect or unnecessary chemotherapy regimens [1] [2].

- Misinterpretation of Biological Differences: A study initially reported that differences between human and mouse species were greater than the differences between tissues within the same species. However, this was later shown to be a batch effect because the data from the two species were generated three years apart. After batch correction, the data clustered by tissue type, not by species [1].

- Retracted Research and Economic Loss: Batch effects are a major contributor to the "reproducibility crisis" in science. For example, a study on a fluorescent serotonin biosensor published in Nature Methods was retracted after it was discovered that the sensor's sensitivity depended strongly on the specific batch of a reagent (fetal bovine serum), making the key results unreproducible [1].

FAQ 3: Our study has a completely confounded design. Can we still correct for batch effects? Yes, but it requires a specific experimental approach. In a confounded scenario where all samples from biological group A are processed in one batch and all from group B in another, it is statistically impossible to distinguish biological differences from technical batch variations [2]. Standard correction methods fail or may remove the biological signal of interest [2].

The most effective solution is to use a reference-material-based ratio method [2]. By profiling a well-characterized reference material (e.g., a standard sample) in every batch alongside your study samples, you can transform the absolute expression values of your study samples into ratios relative to the reference. This scaling effectively corrects for inter-batch technical variation, even in completely confounded designs [2].

FAQ 4: Are batch effects still a relevant concern with modern, large-scale datasets? Yes, batch effects remain a critical concern in the age of big data [3]. The problem has become more complex with the rise of single-cell omics technologies and large-scale multi-omics studies, which involve data measured on different platforms with different distributions and scales [1] [3]. The increasing volume and variety of data make issues of normalization and integration more prominent, not less [3].

Batch Effect Correction Algorithms (BECAs) at a Glance

The following table summarizes several common batch effect correction methods, highlighting their applicability in different study scenarios based on a large-scale multi-omics assessment [2].

| Method | Core Principle | Applicable Scenario (Balanced/Confounded) | Key Consideration |
|---|---|---|---|
| Ratio-based Scaling (e.g., Ratio-G) | Scales feature values of study samples relative to a concurrently profiled reference material [2]. | Both Balanced and Confounded [2] | Requires running reference samples in every batch; highly effective in confounded designs [2]. |
| ComBat | Empirical Bayes framework to adjust for additive and multiplicative batch biases [2] [4]. | Balanced [2] | Can introduce false signals if applied to an unbalanced, confounded design [2] [5]. |
| Harmony | Iterative soft clustering and correction of samples in PCA space to align batches [2] [4]. | Balanced [2] | Output is an integrated embedding, not a corrected expression matrix, limiting some downstream analyses [4]. |
| Per Batch Mean-Centering (BMC) | Centers the data by subtracting the batch-specific mean for each feature [2]. | Balanced [2] | Simple but generally ineffective in confounded scenarios [2]. |
| SVA / RUVseq | Models and removes unwanted variation using surrogate variables or control genes [2]. | Balanced [2] | Performance can be variable and depends on the accurate identification of negative controls or surrogate variables [2]. |
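As a concrete illustration of the simplest entry in the table above, the following is a minimal sketch of per-batch mean-centering. It assumes a samples-by-features NumPy array and a parallel array of batch labels; the function name `batch_mean_center` and the toy values are hypothetical and chosen only for illustration.

```python
import numpy as np

def batch_mean_center(values, batches):
    """Subtract each batch's per-feature mean from that batch's samples.

    values:  array of shape (n_samples, n_features)
    batches: array of shape (n_samples,) with one batch label per sample
    """
    values = np.asarray(values, dtype=float)
    batches = np.asarray(batches)
    corrected = np.empty_like(values)
    for b in np.unique(batches):
        mask = batches == b
        corrected[mask] = values[mask] - values[mask].mean(axis=0)
    return corrected

# Toy data: 4 samples x 2 features; batch "B" carries a +10 offset.
X = np.array([[1.0, 2.0], [3.0, 4.0], [11.0, 12.0], [13.0, 14.0]])
batch = np.array(["A", "A", "B", "B"])
X_bmc = batch_mean_center(X, batch)
```

After centering, both batches share the same per-feature mean of zero. If batch "A" held only controls and batch "B" only treated samples, the same subtraction would also erase the group difference, which is why the table lists BMC as generally ineffective in confounded scenarios.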

Experimental Protocol: Implementing a Reference-Material-Based Ratio Correction

This protocol is designed to mitigate batch effects in a multi-site study, even with a confounded design, by using the ratio-based method validated in large-scale multi-omics studies [2].

1. Preparation and Design

- Select Reference Material: Choose a stable, well-characterized reference material that is representative of your sample type. In multi-omics studies, reference materials derived from immortalized cell lines (e.g., Quartet Project reference materials) are used [2].

- Experimental Plan: Integrate the reference material into every batch of your experiment. Ideally, include multiple technical replicates of the reference in each batch to account for technical variability [2].

2. Data Generation

- Process study samples and reference material replicates concurrently in every batch across all sites. Consistency in timing and protocol is critical [2].

- Generate raw omics data (e.g., transcriptomics, proteomics) for all samples and references using your standard platforms.

3. Data Processing and Ratio Calculation

- For each individual feature (e.g., a specific gene or protein) in a given batch:

  - Calculate the average abundance (e.g., read count, intensity) for the reference material replicates within that batch.

  - For each study sample in the same batch, divide the feature's abundance by the average reference abundance calculated in the previous step.

  - This yields a ratio-based value for every feature in every study sample [2].

- Repeat this process for all features and all batches.

4. Downstream Analysis

- The resulting dataset composed of ratio-scaled values can be integrated across batches for combined analysis, such as differential expression analysis, clustering, and predictive modeling, with minimized batch influence [2].
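The ratio calculation in step 3 can be sketched in a few lines. This is an illustration under stated assumptions, not the implementation used in [2]: the data are assumed to sit in a samples-by-features array, reference replicates are flagged by a boolean vector, and reference means are assumed to be non-zero. The function name `ratio_scale` and the toy numbers are hypothetical.

```python
import numpy as np

def ratio_scale(values, batches, is_reference):
    """Divide each study sample by the mean reference profile of its own batch.

    values:       (n_samples, n_features) abundances
    batches:      (n_samples,) batch label per sample
    is_reference: (n_samples,) True for reference-material replicates
    Returns ratios for the study samples only, in their original order.
    """
    values = np.asarray(values, dtype=float)
    batches = np.asarray(batches)
    is_reference = np.asarray(is_reference, dtype=bool)
    ratios = np.full(values.shape, np.nan)
    for b in np.unique(batches):
        in_batch = batches == b
        ref_rows = in_batch & is_reference
        if not ref_rows.any():
            raise ValueError(f"batch {b!r} has no reference replicates")
        ref_mean = values[ref_rows].mean(axis=0)  # assumed non-zero
        study_rows = in_batch & ~is_reference
        ratios[study_rows] = values[study_rows] / ref_mean
    return ratios[~is_reference]

# Toy example: batch "b2" measures everything 2x higher than batch "b1".
X = np.array([
    [100.0, 50.0],   # b1 reference replicate
    [100.0, 50.0],   # b1 reference replicate
    [200.0, 25.0],   # b1 study sample
    [200.0, 100.0],  # b2 reference replicate
    [200.0, 100.0],  # b2 reference replicate
    [400.0, 50.0],   # b2 study sample
])
batch = ["b1", "b1", "b1", "b2", "b2", "b2"]
is_ref = [True, True, False, True, True, False]
ratios = ratio_scale(X, batch, is_ref)
```

In the toy data the two study samples are biologically identical but differ twofold in raw abundance; after scaling, both have the same ratios because the multiplicative batch factor cancels against the reference measured in the same batch.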

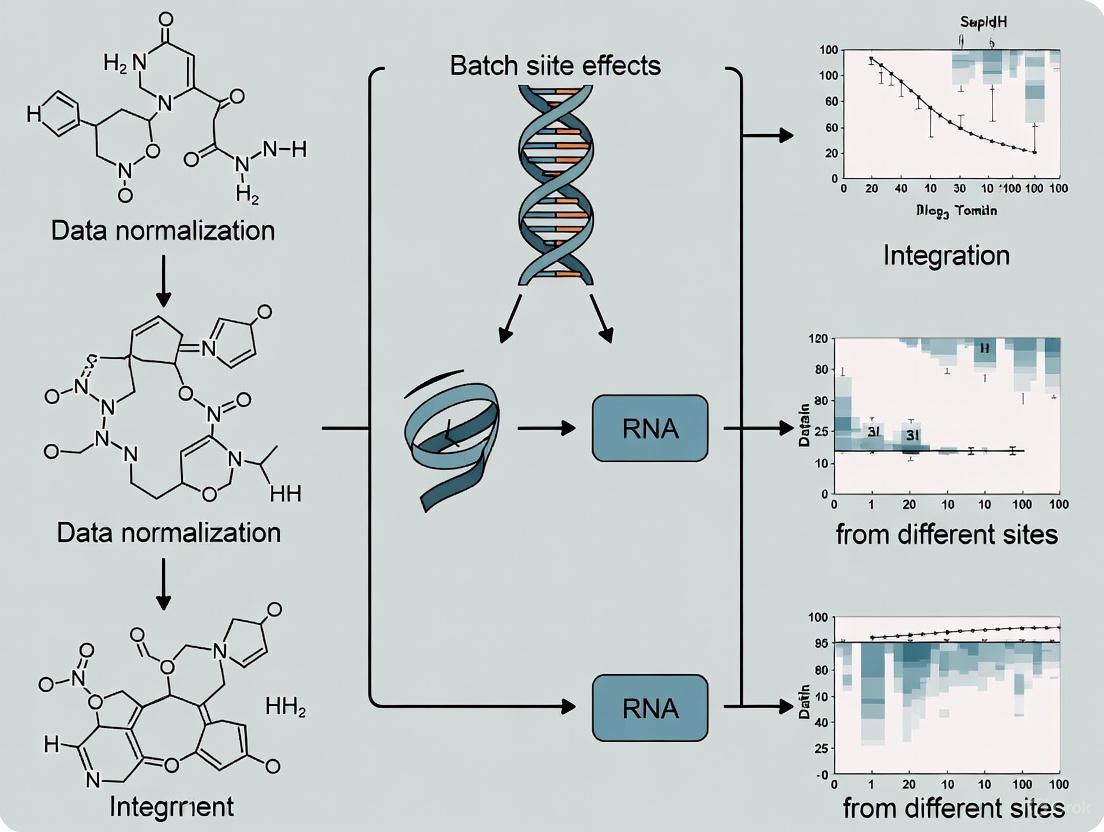

Workflow: From Problem to Solution in Multi-Site Studies

The following diagram illustrates the process of identifying and correcting for batch effects, leading to reliable data integration.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Batch Effect Correction |
|---|---|
| Reference Materials | Well-characterized, stable standards (e.g., certified cell lines, synthetic controls) profiled in every batch to serve as an internal baseline for ratio-based correction methods [2]. |
| Standardized Protocols | Detailed, step-by-step procedures for sample preparation, storage, and data generation to minimize the introduction of technical variation across sites and batches [1]. |
| Control Samples | Samples with known expected outcomes, used to monitor technical performance and identify deviations that may indicate batch effects. |
| Batch Effect Correction Algorithms (BECAs) | Software tools (e.g., ComBat, Harmony, custom ratio-scaling scripts) that statistically adjust the data to remove technical variation while preserving biological signal [2] [4]. |


FAQs: Data Integration & Batch Effects in Multi-Site Embryo Studies

Q1: What is a batch effect, and why is it a critical problem in multi-site embryo studies?

Batch effects are technical variations in data that are not due to the biological subject of study but arise from factors like different labs, equipment, reagent lots, operators, or processing times [6] [7]. In multi-site embryo studies, these effects can severely skew analysis, leading to misleading outcomes, such as a large number of false-positive or false-negative findings [6]. For example, a change in experimental solution can cause shifts in calculated risk, potentially leading to incorrect conclusions or treatment decisions [6]. Batch effects are a major cause of the irreproducibility crisis, raising questions about the reliability of data collected from different batches or platforms [6].

Q2: My multi-omics embryo data comes from different labs. Which batch-effect correction algorithm (BECA) should I use?

The choice of algorithm depends on your experimental design and the type of data you have. Recent large-scale benchmarks have identified several top-performing methods:

- For general multi-omics data (transcriptomics, proteomics, metabolomics): A comprehensive assessment found that the ratio-based method (Ratio-G) is highly effective, especially when batch effects are completely confounded with biological factors of interest. This method involves scaling absolute feature values of study samples relative to those of concurrently profiled reference materials [6].

- For image-based profiling data (e.g., Cell Painting): Benchmarks suggest that Harmony and Seurat RPCA are among the top performers for integrating data across different laboratories and microscopes [7].

- Guidance: If you have a common reference material profiled in every batch, a ratio-based method is highly recommended. For other scenarios, Harmony and Seurat RPCA are strong, computationally efficient choices [6] [7].

Q3: What is a "confounded scenario," and why is it particularly challenging?

A confounded scenario occurs when the biological factor you are studying (e.g., a specific treatment or embryo stage) is completely aligned with the batch. For instance, if all control embryos are processed in Batch 1 and all treated embryos are processed in Batch 2, it becomes nearly impossible to distinguish true biological differences from technical batch variations [6]. In such cases, many standard batch correction methods may fail or even remove the biological signal of interest. The ratio-based method has been shown to be particularly effective in tackling these confounded scenarios [6].

Q4: How common are chromosomal abnormalities in early embryos, and how does this impact data integration?

Chromosomal abnormalities are remarkably common during early embryogenesis. Research indicates that over 70% of fertilized eggs from infertile patients can have chromosome aberrations, which are a primary cause of embryonic lethality and miscarriages [8]. These errors lead to mosaic embryos, where cells with normal genomes coexist with cells exhibiting abnormal genomes [8]. The frequency of these errors is transiently elevated during early cleavage, with one study pinpointing the 4-cell stage in mouse embryos as a period of particular instability, where 13% of cells showed chromosomal abnormalities [9]. This inherent biological variability adds a significant layer of complexity when integrating data across multiple sites, as technical batch effects must be distinguished from this genuine biological noise.

Troubleshooting Guides

Guide 1: Troubleshooting Batch Effect Correction Failures

| Symptom | Possible Cause | Solution |
|---|---|---|
| Biological signal is lost after correction. | Over-correction in a confounded batch-group scenario. | Apply a ratio-based correction method using a common reference sample profiled in all batches [6]. |
| Poor integration of new data with an existing corrected dataset. | Model-based methods require full re-computation with new data. | Use methods like Harmony or Seurat that can project new data into an existing corrected space, or re-run the correction on the entire combined dataset [7]. |
| Batch effects persist after correction. | Inappropriate method selected for the data type or scenario. | Refer to benchmarking studies: switch to a top-performing method like Harmony or Seurat RPCA for image data [7], or a ratio-based method for multi-omics data [6]. |
| New artifacts or false patterns appear in the data. | Over-fitting or incorrect assumptions by the algorithm. | Always visually inspect results (PCA/t-SNE plots) pre- and post-correction. Validate findings with known biological controls. |

Guide 2: Troubleshooting Chromosomal Analysis in Early Embryos

| Symptom | Possible Cause | Solution |
|---|---|---|
| High rates of aneuploidy (abnormal chromosome number) in embryos. | Meiotic errors from the oocyte, which increase with maternal age [8] [10]. | Consider maternal age and oocyte quality as factors. For research, utilize models like the "synthetic oocyte aging" system to study these errors [10]. |
| Mosaic embryos (mix of normal and abnormal cells). | Mitotic errors after fertilization, such as chromosome segregation errors during early cleavages [8] [11]. | Focus on the early cleavage divisions (particularly the 4- to 8-cell transition). Use sensitive single-cell analysis methods like scRepli-seq to detect these errors [9]. |
| Inconsistent results in preimplantation genetic testing (PGT-A). | Technical limitations of PGT-A and the biological reality of mosaicism [8]. | Acknowledge that PGT-A cannot detect all abnormalities. Results should be interpreted with caution by a clinical geneticist. |

Summarized Data & Protocols

Table 1: Performance of Selected Batch Effect Correction Algorithms

| Algorithm | Core Approach | Best For | Key Performance Finding |
|---|---|---|---|
| Ratio-Based (Ratio-G) [6] | Scales feature values relative to a common reference material. | Multi-omics data; Confounded batch-group scenarios. | "Much more effective and broadly applicable than others" in confounded designs [6]. |
| Harmony [7] | Iterative mixture-based correction using PCA. | Image-based profiling; scRNA-seq data. | Consistently ranked among the top three methods; good balance of batch removal and biological signal preservation [7]. |
| Seurat RPCA [7] | Reciprocal PCA and mutual nearest neighbors. | Large, heterogeneous datasets (e.g., from multiple labs). | Consistently ranked among the top three methods; computationally efficient [7]. |
| ComBat [7] | Bayesian framework to model additive/multiplicative noise. | - | Performance is surpassed by newer methods like Harmony and Seurat in several benchmarks [7]. |

Table 2: Key Reagents and Materials for Embryo Data Integration Studies

| Item | Function in Research | Application Note |
|---|---|---|
| Reference Materials (e.g., Quartet Project materials) [6] | Provides a technical baseline for correcting batch effects across labs and platforms. | Should be profiled concurrently with study samples in every batch for ratio-based correction. |
| Cell Painting Assay [7] | A multiplexed image-based profiling assay to capture rich morphological data from cells. | Used to generate high-content data for phenotyping embryo cells under various perturbations. |
| scRepli-seq [8] [9] | A single-cell genomics technique to detect DNA replication timing and chromosomal aberrations. | Critical for identifying chromosome copy number abnormalities and replication stress in single embryonic cells. |
| API-based EMR Integration [12] | Allows AI tools to connect securely with Electronic Medical Record systems. | Enables seamless data flow for AI-driven analysis of embryo images and patient data in clinical workflows. |

Experimental Protocol: Implementing Ratio-Based Batch Effect Correction

Purpose: To effectively remove batch effects in multi-omics studies, especially in confounded scenarios where biological groups are processed in separate batches.

Materials:

- Multi-omics datasets from multiple batches.

- Reference material (e.g., from the Quartet Project [6]) profiled in every batch.

Methodology:

- Experimental Design: In each batch of your study, concurrently profile your study samples alongside one or more aliquots of a well-characterized reference material.

- Data Generation: Process all samples (study and reference) using the same platform and protocol within a batch.

- Ratio Calculation: For each feature (e.g., gene expression, protein abundance) in each study sample, calculate a ratio value. This is done by dividing the absolute feature value of the study sample by the corresponding value from the reference material profiled in the same batch.

Ratio = Feature_value_study_sample / Feature_value_reference_material

- Data Integration: Use the resulting ratio-based values for all downstream analyses and data integration across batches. This scaling effectively normalizes out batch-specific technical variations [6].

For researchers in multi-site embryo studies, the pursuit of reproducible, high-impact findings is often confounded by a pervasive technical challenge: batch effects. These are technical sources of variation introduced when samples are processed in different batches, across different laboratories, by different personnel, or at different times. In multi-center research, where collaboration and large sample sizes are essential, failing to account for batch effects can lead to misleading conclusions and irreproducibility. This guide presents real-world case studies and data to illustrate the profound consequences of batch effects and provides a toolkit for their identification and correction.

FAQs: Understanding the Impact of Batch Effects

What are batch effects and why are they a critical concern in multi-site studies?

Batch effects are technical, non-biological variations in data that are introduced by differences in experimental conditions [13] [14]. These can arise from a multitude of sources, including:

- Different sequencing runs or instruments

- Variations in reagent lots

- Changes in sample preparation protocols

- Different personnel handling the samples

- Experiments conducted over multiple days or weeks [15]

In multi-site embryo studies, where samples are processed across different laboratories, these effects are magnified. They are a critical concern because they can confound biological signals, making it difficult or impossible to distinguish true biological differences from technical artifacts. This can lead to increased variability, reduced statistical power, and, in the worst cases, incorrect conclusions [16] [17].

Can batch effects truly lead to retracted papers and misdirected clinical decisions?

Yes, the impact of batch effects can be severe and far-reaching. Published case studies demonstrate serious consequences:

- Clinical Misclassification: In a clinical trial, a change in the RNA-extraction solution introduced a batch effect in gene expression profiles. This resulted in an incorrect gene-based risk calculation, leading to 162 patients being misclassified, 28 of whom subsequently received incorrect or unnecessary chemotherapy regimens [16].

- Article Retraction: A high-profile study published a novel fluorescent serotonin biosensor. The authors later discovered that the biosensor's sensitivity was highly dependent on the batch of fetal bovine serum (FBS) used. When the FBS batch changed, the key results could not be reproduced, leading to the retraction of the article [16].

- Spurious Biological Findings: One analysis suggested that gene expression differences between human and mouse species were greater than the differences between tissues within the same species. A re-analysis revealed that the data from the two species were generated three years apart. After batch effect correction, the data clustered by tissue type rather than by species, indicating the initial conclusion was an artifact of batch effects [16].

What is the largest source of technical variability in multi-laboratory cell phenotyping studies?

In a systematic multi-site assessment of reproducibility in high-content cell phenotyping, the largest source of technical variability was found to be laboratory-to-laboratory variation [18].

This study involved three laboratories using an identical protocol and key reagents to generate live-cell imaging data on cell migration. A Linear Mixed Effects (LME) model was used to quantify variability at different hierarchical levels. While biological variability (between cells and over time) was substantial, technical variability contributed a median of 32% of the total variance across all measured variables. Within this technical variability, the lab-to-lab component was the largest, followed by variability between persons, experiments, and technical replicates [18].

The study further showed that simply combining data from different labs without correction almost doubled the cumulative technical variability [18].

How can I visually identify batch effects in my own data?

Before attempting correction, it is crucial to diagnose the presence of batch effects. Several common visualization methods can help:

- Principal Component Analysis (PCA): Perform PCA on your raw data and color the data points by batch. If the samples cluster strongly based on their batch rather than their biological condition (e.g., treatment vs. control), it indicates a strong batch effect [19] [20] [15].

- t-SNE or UMAP Plots: These dimensionality reduction techniques can also reveal batch effects. In the presence of a batch effect, cells or samples from the same batch will cluster together, even if they are from different biological groups [19] [20].
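Alongside the plots, the same diagnosis can be expressed as a number. The sketch below computes principal component scores with a plain SVD and then asks what fraction of the variance along each component is explained by the batch label. The simulated data, the helper names `pca_scores` and `variance_explained_by_label`, and the size of the shift are all assumptions made for illustration.

```python
import numpy as np

def pca_scores(X, n_components=2):
    """Project samples onto the top principal components via SVD."""
    Xc = X - X.mean(axis=0)
    U, S, _ = np.linalg.svd(Xc, full_matrices=False)
    return U[:, :n_components] * S[:n_components]

def variance_explained_by_label(scores, labels):
    """Per component: between-group sum of squares / total sum of squares."""
    labels = np.asarray(labels)
    grand_mean = scores.mean(axis=0)
    total = ((scores - grand_mean) ** 2).sum(axis=0)
    between = np.zeros(scores.shape[1])
    for lab in np.unique(labels):
        grp = scores[labels == lab]
        between += len(grp) * (grp.mean(axis=0) - grand_mean) ** 2
    return between / total

# Simulated data: two sites, identical biology, site2 shifted on every gene.
rng = np.random.default_rng(0)
n_per_batch, n_genes = 30, 50
batch = np.repeat(["site1", "site2"], n_per_batch)
X = rng.normal(size=(2 * n_per_batch, n_genes))
X[batch == "site2"] += 3.0

scores = pca_scores(X)
r2 = variance_explained_by_label(scores, batch)
```

A value of `r2[0]` close to 1 means the first principal component is essentially a batch axis, which is the numerical counterpart of samples separating by batch on the PCA plot. Running the same function with the biological condition as the label shows whether biology or batch dominates the leading components.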


What are the signs that my batch effect correction may have been too aggressive (over-correction)?

Over-correction occurs when batch effect removal algorithms also remove genuine biological signal. Key signs include:

- Distinct Cell Types Cluster Together: On a PCA or UMAP plot, distinct biological cell types that should form separate clusters are merged into one [19] [20].

- Loss of Expected Markers: Canonical cell-type-specific markers (e.g., known markers for a specific T-cell subtype) are absent from differential expression analysis after correction [19].

- Poor Marker Quality: A significant portion of the genes identified as cluster-specific markers are housekeeping genes, like ribosomal genes, which are broadly expressed across cell types and lack biological specificity [19] [20].

- Complete Overlap of Different Conditions: After correction, samples from very different biological conditions or experiments show a complete overlap, which is biologically implausible [20].
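The "poor marker quality" sign lends itself to a quick automated check. The sketch below flags ribosomal protein genes by the `RPS`/`RPL` symbol prefixes and reports their share of a marker list. This is a crude heuristic: the prefix match also catches a few non-ribosomal genes such as the RPS6K kinases, and the function name and example marker list are hypothetical.

```python
def ribosomal_fraction(marker_genes, prefixes=("RPS", "RPL")):
    """Fraction of marker genes whose symbol starts with a ribosomal prefix.

    Symbols are upper-cased first so that mouse-style names (Rps6, Rpl13)
    are matched as well. Prefix matching is a heuristic, not an annotation.
    """
    genes = [g.upper() for g in marker_genes]
    if not genes:
        return 0.0
    hits = sum(g.startswith(prefixes) for g in genes)
    return hits / len(genes)

# Hypothetical marker list for one cluster after correction.
cluster_markers = ["RPS6", "Rpl13", "SOX2", "POU5F1"]
fraction = ribosomal_fraction(cluster_markers)
```

What counts as "a significant portion" is a judgment call that depends on the tissue and the marker-calling settings; comparing the fraction before and after correction is usually more informative than any fixed cutoff.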

Case Study: Multi-Site Assessment of Cell Migration

Experimental Protocol

A landmark study designed to quantify sources of variability in high-content imaging involved three independent laboratories [18].

- Objective: To determine the sources of variability (biological and technical) in live-cell imaging data of migrating cancer cells.

- Cell Line: HT1080 fibrosarcoma cells, stably expressing fluorescent labels (LifeAct-mCherry and H2B-EGFP).

- Standardization: A detailed common protocol, the cell line, and all key reagents were distributed to all participating labs to minimize biological and technical variance.

- Nested Design: The experiment followed a nested structure with three laboratories, three persons per lab, three independent experiments per person, two conditions (control and ROCK inhibitor) per experiment, and three technical replicates per condition.

- Imaging & Analysis: Automated fluorescence microscopes with environmental chambers were used. All microscope-derived images were transferred to a single laboratory for uniform processing and quantification using CellProfiler and custom MATLAB scripts [18].

Key Findings and Quantitative Data

The study used a Linear Mixed Effects (LME) model to partition the variance for 18 different cell morphology and migration variables. The table below summarizes the relative contribution of each source to the total variance.

Table 1: Sources of Variance in Multi-Site Cell Phenotyping Data [18]

| Source of Variance | Type | Relative Contribution to Total Variance |
|---|---|---|
| Between Laboratories | Technical | Major Source |
| Between Persons | Technical | Moderate Source |
| Between Experiments | Technical | Minor Source |
| Between Technical Replicates | Technical | Minor Source |
| Between Cells (within a population) | Biological | Substantial |
| Within Cells (over time) | Biological | Substantial |

Key Conclusion: Despite rigorous standardization, laboratory-to-laboratory variation was the dominant technical source of variability. This prevented high-quality meta-analysis of the primary data. However, the study also found that batch effect removal methods could markedly improve the ability to combine datasets from different laboratories for perturbation analyses [18].

The Scientist's Toolkit

Research Reagent Solutions

Table 2: Essential Materials for Batch Effect Monitoring and Correction

| Item | Function in Batch Effect Management |
|---|---|
| Quality Control Standards (QCS) | A standardized reference material (e.g., a tissue-mimicking gelatin matrix with a controlled analyte like propranolol) run alongside experimental samples to monitor technical variation across slides, days, and laboratories [21]. |
| Common Cell Line | Using an identical, stable cell line across all sites (e.g., HT1080 fibrosarcoma as in the case study) minimizes biological variability, allowing researchers to isolate technical batch effects [18]. |
| Common Reagent Lots | Distributing aliquots from the same lot of key reagents (e.g., fetal bovine serum, collagen, enzymes) to all participating labs prevents reagent-based variability [18] [16]. |
| Detailed Common Protocol | A single, rigorously detailed experimental protocol ensures consistency in sample handling, preparation, and imaging across all personnel and sites [18]. |


Computational Correction Methods

A wide array of computational tools exists to correct for batch effects. The choice of method depends on the data type (e.g., bulk RNA-seq, single-cell RNA-seq, proteomics) and the experimental design.

Table 3: Common Batch Effect Correction Algorithms

| Method | Brief Description | Common Use Cases |
|---|---|---|
| ComBat / ComBat-seq | Uses an empirical Bayes framework to adjust for batch effects. ComBat-seq is designed specifically for raw count data from RNA-seq [22] [15]. | Bulk RNA-seq, Microarray, Proteomics |
| limma (removeBatchEffect) | Uses a linear model to remove batch effects from normalized expression data [22] [15]. | Bulk RNA-seq, Microarray |
| Harmony | Iteratively clusters cells across batches and corrects them, maximizing diversity within each cluster. Known for its speed and efficiency [19] [14] [20]. | Single-cell RNA-seq |
| Seurat Integration | Uses Canonical Correlation Analysis (CCA) and mutual nearest neighbors (MNNs) to find "anchors" between datasets for integration [19] [14] [20]. | Single-cell RNA-seq |
| Mutual Nearest Neighbors (MNN) | Identifies pairs of cells that are nearest neighbors in each batch and uses them to infer the batch correction vector [19] [14]. | Single-cell RNA-seq |
| BERT | A high-performance, tree-based framework for integrating large-scale, incomplete omics datasets, leveraging ComBat or limma at each node of the tree [22]. | Large-scale multi-omics |
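To make the linear-model idea in the table concrete, here is a minimal NumPy sketch written in the spirit of limma's removeBatchEffect. It is not a reimplementation of that function: it assumes normalized, log-scale data, a single batch factor, and a single condition factor, and all function names are hypothetical. The batch factor uses sum-to-zero coding so that removing it leaves the overall expression level unchanged.

```python
import numpy as np

def sum_to_zero_coding(labels):
    """Encode a factor with sum-to-zero contrasts (levels - 1 columns)."""
    labels = np.asarray(labels)
    levels = np.unique(labels)
    Z = (labels[:, None] == levels[None, :-1]).astype(float)
    Z[labels == levels[-1]] = -1.0  # last level is minus the sum of the others
    return Z

def treatment_coding(labels):
    """Indicator columns for every level except the first."""
    labels = np.asarray(labels)
    levels = np.unique(labels)
    return (labels[:, None] == levels[None, 1:]).astype(float)

def remove_batch_linear(Y, batch, condition):
    """Fit Y ~ intercept + condition + batch, then subtract the batch term."""
    Y = np.asarray(Y, dtype=float)
    Z = sum_to_zero_coding(batch)
    C = treatment_coding(condition)
    design = np.hstack([np.ones((len(Y), 1)), C, Z])
    coef, *_ = np.linalg.lstsq(design, Y, rcond=None)
    batch_coef = coef[1 + C.shape[1]:]
    return Y - Z @ batch_coef

# Balanced toy design: both conditions appear in both batches.
batch = np.array(["A"] * 4 + ["B"] * 4)
condition = np.array(["ctrl", "ctrl", "trt", "trt"] * 2)
Y = np.zeros((8, 2))
Y[:, 0], Y[:, 1] = 10.0, 20.0
Y[condition == "trt", 0] += 2.0   # true biological effect on feature 0
Y[batch == "B"] += 5.0            # technical offset on every feature
corrected = remove_batch_linear(Y, batch, condition)
```

Because condition is included in the model, the treatment effect of 2.0 on the first feature survives while the batch offset is removed. In a fully confounded design the batch and condition columns are collinear, so the least-squares fit can no longer attribute the difference to one or the other.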


Best Practices Workflow

To effectively manage batch effects in multi-site embryo studies, a proactive and comprehensive strategy is required.

Troubleshooting Guide: Common Batch-Effect Correction Issues

1. Problem: Loss of Differential Expression Signals After Correction

- Why it happens: Many batch-effect correction methods over-correct the data, removing subtle but biologically meaningful expression differences along with technical variations [4] [16].

- Solution: Implement an order-preserving correction method. These methods, often based on monotonic deep learning networks, are specifically designed to maintain the original ranking of gene expression levels within each cell, thereby protecting differential expression patterns [4].

- Check: Compare the Spearman correlation of gene expression rankings before and after correction. A method that preserves order will show a high correlation coefficient [4].
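The check above can be sketched as follows. The code computes, for each cell, the Spearman correlation between its gene expression vector before and after correction, using average ranks for ties. The matrices are toy values, the "corrections" are stand-ins (a monotone transform and a deliberately order-breaking reversal), and the helper names are hypothetical.

```python
import numpy as np

def average_ranks(x):
    """Rank values from 1..n, giving tied values their average rank."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)          # last rank occupied by each unique value
    starts = ends - counts + 1        # first rank occupied by each unique value
    return ((starts + ends) / 2.0)[inverse]

def spearman(a, b):
    """Spearman correlation as the Pearson correlation of the ranks."""
    return float(np.corrcoef(average_ranks(a), average_ranks(b))[0, 1])

def per_cell_rank_preservation(before, after):
    """One Spearman coefficient per cell (rows are cells, columns are genes)."""
    return np.array([spearman(b, a) for b, a in zip(before, after)])

before = np.array([[0.0, 1.0, 5.0, 2.0],
                   [3.0, 0.0, 0.0, 7.0]])
after_monotone = 0.5 * np.log1p(before) + 1.0   # order-preserving stand-in
after_reversed = before[:, ::-1]                # order-breaking stand-in

rho_monotone = per_cell_rank_preservation(before, after_monotone)
rho_reversed = per_cell_rank_preservation(before, after_reversed)
```

Any strictly increasing transformation leaves the within-cell ranking intact and yields a coefficient of 1 for every cell, whereas the reversed matrix does not. A cell whose expression vector is constant has no defined ranking, and the coefficient for it comes out as NaN.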

2. Problem: Distorted Inter-Gene Correlations

- Why it happens: Methods focused solely on cell alignment across batches can disrupt the co-expression relationships between genes, which are crucial for understanding regulatory networks [4].

- Solution: Choose algorithms that incorporate the preservation of inter-gene correlation into their objective function. Evaluate the retention of significantly correlated gene pairs post-correction using Pearson and Kendall correlation metrics [4].

- Check: For a given cell type, identify significantly correlated gene pairs in the original batches. Calculate the root mean square error (RMSE) of these correlations after correction; lower values indicate better preservation [4].
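A minimal sketch of this RMSE check is given below. It assumes the significantly correlated gene pairs have already been selected upstream and are passed in as index pairs; the simulated expression values and the function name `correlation_rmse` are hypothetical.

```python
import numpy as np

def correlation_rmse(before, after, pairs):
    """RMSE between Pearson correlations of selected gene pairs.

    before, after: (n_cells, n_genes) matrices for one cell type
    pairs:         list of (i, j) gene index pairs chosen beforehand
    """
    corr_before = np.corrcoef(before, rowvar=False)
    corr_after = np.corrcoef(after, rowvar=False)
    i, j = np.array(pairs).T
    diff = corr_after[i, j] - corr_before[i, j]
    return float(np.sqrt(np.mean(diff ** 2)))

rng = np.random.default_rng(0)
n_cells = 200
gene0 = rng.normal(size=n_cells)
gene1 = gene0 + 0.1 * rng.normal(size=n_cells)   # strongly co-expressed with gene0
gene2 = rng.normal(size=n_cells)
before = np.column_stack([gene0, gene1, gene2])

# Stand-in "corrections": an affine rescaling keeps correlations intact,
# while replacing gene1 with fresh noise destroys its link to gene0.
after_good = 2.0 * before + 1.0
after_bad = before.copy()
after_bad[:, 1] = rng.normal(size=n_cells)

pairs = [(0, 1)]
rmse_good = correlation_rmse(before, after_good, pairs)
rmse_bad = correlation_rmse(before, after_bad, pairs)
```

The rescaled matrix gives an RMSE of essentially zero, and the matrix with the broken co-expression pair gives a large one. The same function can be run with a rank-based correlation matrix in place of `np.corrcoef` if a Kendall or Spearman variant is preferred.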

3. Problem: Poor Integration of Datasets with High Missing Value Rates

- Why it happens: Incomplete omic profiles (e.g., from proteomics or metabolomics) are common in multi-site studies. Standard correction tools may fail or introduce significant data loss when faced with many missing values [22].

- Solution: Use a framework designed for incomplete data, such as Batch-Effect Reduction Trees (BERT). BERT decomposes the integration task into a binary tree, allowing it to handle features that are missing completely in some batches without the massive data loss associated with other methods [22].

- Check: Monitor the percentage of retained numeric values after correction. Methods like BERT are designed to retain all non-missing values, whereas others can lose over 50% of the data in high-missingness scenarios [22].
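The retention check can be computed with a few lines of Python. This is a sketch under simple assumptions: matrices are lists of rows, missing values are encoded as `None` or NaN, and the function names are illustrative rather than part of BERT or any other package.

```python
import math


def count_numeric(matrix):
    """Count entries that are neither None nor NaN."""
    return sum(
        1
        for row in matrix
        for value in row
        if value is not None and not math.isnan(value)
    )


def retention_percent(before, after):
    """Percentage of numeric values in `before` that are still numeric in `after`."""
    n_before = count_numeric(before)
    if n_before == 0:
        raise ValueError("input matrix contains no numeric values")
    return 100.0 * count_numeric(after) / n_before


nan = float("nan")
before = [[1.0, nan, 3.0], [nan, 2.0, 4.0], [5.0, 6.0, nan]]
after_full = [[0.9, nan, 3.1], [nan, 2.1, 3.9], [5.0, 6.0, nan]]   # nothing dropped
after_lossy = [[0.9, nan, nan], [nan, 2.1, nan], [5.0, 6.0, nan]]  # last feature dropped

print(retention_percent(before, after_full))
print(retention_percent(before, after_lossy))
```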

4. Problem: Inability to Handle Imbalanced or Confounded Study Designs

- Why it happens: Batch effects can be confounded with biological outcomes of interest (e.g., if all controls were processed in one batch and all cases in another). Standard correction cannot distinguish these technical from biological effects [16].

- Solution: Leverage methods that allow for the specification of covariates and reference samples. Providing this information helps the algorithm model and preserve biological conditions. BERT, for instance, allows users to define samples with known covariates as references to guide the correction of unknown samples [22].

- Check: Always visualize the data using UMAP or t-SNE colored by both batch and biological condition before correction. If they are perfectly confounded, statistical correction is risky, and a design-based solution is preferable [16].
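A simple tabular check can complement the plots. The sketch below cross-tabulates batch against biological condition and flags the case in which every batch contains a single condition, so that batch fully determines condition. The function names, site labels, and stage labels are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def crosstab(batches, conditions):
    """Count samples per (batch, condition) combination."""
    table = defaultdict(Counter)
    for batch, condition in zip(batches, conditions):
        table[batch][condition] += 1
    return {batch: dict(counts) for batch, counts in table.items()}


def is_fully_confounded(batches, conditions):
    """True when each batch holds exactly one condition and >1 condition exists."""
    table = crosstab(batches, conditions)
    several_conditions = len(set(conditions)) > 1
    return several_conditions and all(len(counts) == 1 for counts in table.values())


# Hypothetical designs: embryo stage vs. processing site.
confounded_batches = ["site1", "site1", "site2", "site2"]
confounded_stages = ["E6.5", "E6.5", "E7.5", "E7.5"]

balanced_batches = ["site1", "site1", "site2", "site2"]
balanced_stages = ["E6.5", "E7.5", "E6.5", "E7.5"]

print(crosstab(confounded_batches, confounded_stages))
print(is_fully_confounded(confounded_batches, confounded_stages))
print(is_fully_confounded(balanced_batches, balanced_stages))
```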

5. Problem: Over-Correction Leading to the Loss of Rare Cell Types

- Why it happens: Procedural correction methods that separate the correction step from clustering can inadvertently remove subtle biological signals, such as those from small populations of rare cells [4].

- Solution: Consider methods that integrate batch-effect correction with cell clustering, or use the Local Inverse Simpson's Index (LISI) to evaluate both batch mixing (high LISI computed on batch labels) and cell-type purity (low LISI computed on cell-type labels) after correction [4].

- Check: After correction, verify that the clusters corresponding to known rare cell types still contain a representative number of cells and exhibit expected marker gene expression [4].

Frequently Asked Questions (FAQs)

Q1: What does "order-preserving" mean in the context of batch-effect correction, and why is it critical for my analysis? A1: "Order-preserving" refers to a correction method's ability to maintain the original relative rankings of gene expression levels within each cell or batch after processing [4]. This is critical because the relative abundance of transcripts, not just their presence or absence, drives biological interpretation. Disrupting this order can lead to false conclusions in downstream analyses like differential expression or pathway enrichment studies [4].

Q2: How can I quantitatively assess if my batch-effect correction has successfully preserved biological signals? A2: You should use a combination of metrics to get a complete picture [4]:

- For Batch Mixing: Use the Local Inverse Simpson's Index (LISI). A higher LISI score indicates better mixing of batches.

- For Cell-Type Purity: Use the Average Silhouette Width (ASW) with respect to biological labels. A higher ASW indicates cells of the same type are more compact and distinct from other types.

- For Biological Structure: Use the Adjusted Rand Index (ARI) to compare clustering results before and after correction.

- For Gene Relationships: Calculate the correlation (e.g., Pearson) of inter-gene correlations before and after correction [4].

Q3: My multi-site embryo study has severe data incompleteness (many missing values). Which correction methods are suitable? A3: Traditional methods struggle with this, but the BERT (Batch-Effect Reduction Trees) framework is specifically designed for integrating incomplete omic profiles [22]. In simulations with up to 50% missing values, HarmonizR lost up to 88% of numeric values when blocking four batches, whereas BERT's tree-based approach retained all non-missing values, making it well suited to sparse data from embryo studies [22].

Q4: Are there trade-offs between effectively removing batch effects and preserving the biological truth of my data? A4: Yes, this is a fundamental challenge. Overly aggressive correction can remove biological variation along with batch effects, a phenomenon known as "over-correction" [16]. This is why choosing a method with features like order-preservation and correlation-maintenance is crucial, as they are explicitly designed to minimize this trade-off by protecting intrinsic biological patterns during the correction process [4].

Quantitative Data on Correction Method Performance

The table below summarizes key performance metrics for several batch-effect correction methods, highlighting the importance of specialized features.

Table 1: Comparison of Batch-Effect Correction Method Performance

| Method / Feature | Preserves Gene Order? | Retains Inter-Gene Correlation? | Handles Incomplete Data? | Key Performance Metric |

|---|---|---|---|---|

| ComBat [4] | Yes [4] | Moderate [4] | No (requires complete matrix) | Good for basic correction, but hampered by scRNA-seq sparsity [4]. |

| Harmony [4] | Not Applicable (output is embedding) [4] | Not Evaluated | No | Effective for cell alignment and visualization [4]. |

| Seurat v3 [4] | No [4] | No [4] | No | Good cell-type clustering, but can distort gene-gene correlations [4]. |

| MMD-ResNet [4] | No [4] | No [4] | No | Uses deep learning for distribution alignment [4]. |

| Order-Preserving Method (Global) [4] | Yes [4] | High [4] | No | Superior in maintaining Spearman correlation and differential expression signals [4]. |

| BERT [22] | Not Specified | Not Specified | Yes [22] | Retains >99% of numeric values vs. up to 88% loss with other methods on 50% missing data [22]. |

Table 2: Evaluation Metrics for Biological Signal Preservation

| Metric | What it Measures | Ideal Outcome | How to Calculate |

|---|---|---|---|

| Spearman Correlation [4] | Preservation of gene expression ranking before vs. after correction. | Coefficient close to 1. | Non-parametric (rank) correlation between each gene's raw and corrected expression values. |

| Inter-Gene Correlation RMSE [4] | Preservation of correlation structure between gene pairs. | Low RMSE value. | Root Mean Square Error of Pearson correlations for significant gene pairs before and after correction [4]. |

| ASW (Biological Label) [4] [22] | Compactness of biological groups (e.g., cell types). | Value close to 1. | \( \mathrm{ASW} = \frac{1}{N} \sum_{i=1}^{N} \frac{b_i - a_i}{\max(a_i, b_i)} \), where \(a_i\) is the mean intra-cluster distance and \(b_i\) is the mean nearest-cluster distance for cell \(i\) [22]. |

| LISI (Batch) [4] | Diversity of batches in local neighborhoods (batch mixing). | High score. | Inverse Simpson's index calculated for each cell's local neighborhood. |
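The ASW formula in the table can be evaluated directly, as in the sketch below. It assumes Euclidean distance on a low-dimensional embedding, scores singleton clusters as 0 by convention, and uses made-up coordinates; library implementations such as `sklearn.metrics.silhouette_score` are the usual choice for real data.

```python
import math
from statistics import mean


def average_silhouette_width(points, labels):
    """Mean over cells of (b_i - a_i) / max(a_i, b_i) using Euclidean distance."""
    clusters = {}
    for index, label in enumerate(labels):
        clusters.setdefault(label, []).append(index)
    if len(clusters) < 2:
        raise ValueError("at least two clusters are required")

    widths = []
    for i, point in enumerate(points):
        own = clusters[labels[i]]
        if len(own) == 1:
            widths.append(0.0)  # convention for singleton clusters
            continue
        # a_i: mean distance to the other members of the cell's own cluster.
        a_i = mean(math.dist(point, points[j]) for j in own if j != i)
        # b_i: smallest mean distance to the members of any other cluster.
        b_i = min(
            mean(math.dist(point, points[j]) for j in members)
            for label, members in clusters.items()
            if label != labels[i]
        )
        denom = max(a_i, b_i)
        widths.append((b_i - a_i) / denom if denom > 0 else 0.0)
    return mean(widths)


# Hypothetical 2-D embedding with two compact, well-separated groups.
points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
asw_matching = average_silhouette_width(points, ["A", "A", "B", "B"])
asw_mismatched = average_silhouette_width(points, ["A", "B", "A", "B"])
print(asw_matching, asw_mismatched)
```

Labels that follow the geometric groups give a value near 1, while labels that cut across the groups give a negative value.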

Experimental Protocol: Evaluating an Order-Preserving Correction

This protocol outlines the steps to assess a batch-effect correction method's performance in a multi-site embryo study context.

Objective: To validate that a batch-effect correction method successfully removes technical variation while preserving the order of gene expression and inter-gene correlation structure.

Input: A raw, merged gene expression matrix (cells x genes) from multiple batches (sites/labs), with associated metadata for batch ID and known biological labels (e.g., embryo developmental stage).

Procedure:

Preprocessing and Initial Clustering:

- Apply standard scRNA-seq preprocessing (normalization, log-transformation, highly variable gene selection).

- Perform initial cell clustering on the uncorrected data using a graph-based method (e.g., Seurat's FindClusters) to establish a baseline for cell-type identification [4].

Application of Batch-Effect Correction:

Quantitative Evaluation of Order and Correlation Preservation:

- Gene Order Preservation:

- For each batch, select a major cell type and a rare cell type.

- For each gene, calculate the Spearman correlation coefficient between its raw (non-zero) expression values and its corrected values.

- Summarize the distribution of these correlations (e.g., median, IQR) for each method. A method that preserves order will have a distribution centered near 1 [4].

- Inter-Gene Correlation Preservation:

- For a stable cell type present in multiple batches, identify gene pairs that are significantly correlated (same direction, FDR-adjusted p-value < 0.05) in the raw data of both batches [4].

- Calculate the Pearson correlation for these gene pairs in the corrected data.

- Compute the RMSE between the pre- and post-correction correlation values for these pairs. A lower RMSE indicates better preservation [4].

Visualization and Final Assessment:

- Generate UMAP plots colored by batch and by biological label for both the raw and corrected data.

- A successful correction will show mixed batches but distinct, well-separated biological clusters.

- Compare the quantitative metrics from the order- and correlation-preservation evaluation above across methods to select the one that best preserves biological signal.

The workflow for this protocol is summarized in the following diagram:

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Computational Tools and Resources

| Item | Function / Purpose | Example / Note |

|---|---|---|

| Order-Preserving Algorithm | A correction method that uses a monotonic deep learning network to maintain the original ranking of gene expression values, crucial for protecting differential expression signals [4]. | The "global monotonic model" described in [4]. |

| BERT Framework | A high-performance, tree-based data integration method for incomplete omic profiles. It minimizes data loss and can handle severely imbalanced conditions using covariates and references [22]. | Available as an R package from Bioconductor [22]. |

| Reference Samples | A set of samples with known biological covariates (e.g., a specific embryo stage) processed across multiple batches. Used to guide the correction of unknown samples and account for design imbalance [22]. | For example, include two samples of a known cell type in every batch to anchor the correction [22]. |

| Covariate Metadata | Structured information (e.g., in a .csv file) detailing the batch ID, biological condition, and other relevant factors (e.g., donor sex) for every sample. Essential for informing correction algorithms what variation to preserve [22]. | Must be complete and accurately linked to each sample in the expression matrix. |

| Quality Control Metrics (ASW, LISI, ARI) | A set of standardized metrics to quantitatively evaluate the success of integration, balancing batch removal against biological preservation [4] [22]. | Use ASW on biological labels and LISI on batch ID for a balanced view [4]. |

The logical relationship between the key components of a successful batch-effect correction strategy is shown below:

A Practical Toolkit: Batch Effect Correction Methods for Single-Cell and Spatial Embryo Data

In multi-site embryo studies, the integration of data from different labs, protocols, and points in time is essential for robust biological discovery. However, this integration is challenged by batch effects—systematic technical variations that can obscure true biological signals. This guide provides a technical deep dive into four prominent batch-effect correction algorithms, offering troubleshooting advice and protocols to empower your research.

Core Algorithm Principles and Troubleshooting FAQs

What are the fundamental differences in how these algorithms work?

The core batch-effect correction methods differ significantly in their underlying mathematical approaches and the scenarios for which they are best suited.

| Algorithm | Core Principle | Primary Data Type | Key Assumption |

|---|---|---|---|

| ComBat | Empirical Bayes framework to adjust for known batch variables by modeling and shrinking batch effect estimates. [4] [23] [24] | Bulk RNA-seq, Microarrays | Batch effects are consistent across genes; population composition is similar across batches. [25] |

| limma | Linear modeling to remove batch effects as a covariate in the design matrix, without altering the raw data for downstream testing. [24] | Bulk RNA-seq, Microarrays | Batch effects are additive and known in advance. [23] |

| Harmony | Iterative clustering in PCA space with soft clustering and a diversity penalty to maximize batch mixing. [26] [3] | scRNA-seq, Multi-omics | Biological variation can be separated from technical batch variation in a low-dimensional space. [26] |

| MNN Correct | Identifies Mutual Nearest Neighbors (pairs of cells of the same type across batches) to estimate and correct cell-specific batch vectors. [26] [25] | scRNA-seq | A subset of cell populations is shared between batches; batch effect is orthogonal to biological subspace. [25] |

Algorithm Workflow Selection

How do I choose between ComBat and limma's removeBatchEffect for my bulk transcriptomics data?

The choice hinges on whether you need to correct the data matrix for visualization or include batch in your statistical model for differential expression.

- Use limma's removeBatchEffect for exploratory analysis and visualization: This function is ideal for creating PCA plots or heatmaps where you want to remove the batch effect to see the underlying biological structure more clearly. It works by fitting a linear model that includes your batch as a covariate and then removes its effect. Critically, the original data for differential testing remains unchanged; batch is included as a covariate in the final model. [24]

- Use ComBat for a powerful correction when batch is known: ComBat uses an empirical Bayes approach to shrink the batch effect estimates towards the overall mean, which is particularly beneficial when you have many batches or small sample sizes per batch. This makes it robust, but it directly modifies your data. [4] [24] [25] A key limitation is that it assumes the composition of cell populations is the same across batches, which can lead to overcorrection if this is not true. [25]

- Best Practice Recommendation: For differential expression analysis, the most reliable method is often to include 'batch' as a covariate in your statistical model (e.g., in DESeq2 or limma) without pre-correcting the data with ComBat or removeBatchEffect. This approach models the effect of batch without altering the raw counts, reducing the risk of introducing artifacts. [24]

My data has different cell type compositions across batches. Which method should I use to avoid overcorrection?

This is a common challenge where bulk methods like ComBat and limma fail, as they assume uniform cell type composition. In this scenario, methods designed for single-cell data are superior.

- The Problem: If you use ComBat on data where one cell type is only present in one batch, the algorithm will incorrectly interpret the unique gene expression profile of that cell type as a batch effect and attempt to remove it, thereby erasing true biological variation. [25]

- Recommended Solution: MNN Correct or Harmony. These methods are explicitly designed to handle differing cell type compositions. [26] [25]

- MNN Correct works by identifying "mutual nearest neighbors"—pairs of cells from different batches that are most similar to each other. It assumes these pairs represent the same cell type and uses them to estimate the batch effect, which is then applied to all cells. This allows it to correct only the shared cell populations without forcing all populations to align. [25]

- Harmony operates in a PCA space and iteratively clusters cells while applying a penalty that encourages each cluster to include cells from multiple batches. This allows it to successfully integrate batches even when cell type abundances vary significantly. [26]

- Troubleshooting Tip: To diagnose overcorrection, use the RBET (Reference-informed Batch Effect Testing) framework. It uses reference genes (e.g., housekeeping genes) with stable expression to evaluate correction quality. A good correction should show low RBET values, while overcorrection will cause the value to rise again as biological signal is degraded. [27]
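The mutual-nearest-neighbor idea described above can be illustrated with a short sketch. It is deliberately simplified and is not the published MNN Correct algorithm: it works on a toy 2-D embedding, uses a fixed `k`, and applies one averaged batch vector instead of cell-specific vectors. All names and coordinates are hypothetical.

```python
import math
from statistics import mean


def k_nearest(query, reference, k):
    """Indices of the k points in `reference` closest to `query`."""
    order = sorted(range(len(reference)), key=lambda j: math.dist(query, reference[j]))
    return set(order[:k])


def mutual_nearest_neighbors(batch_a, batch_b, k=1):
    """Pairs (i, j) where a_i is among b_j's neighbors and b_j among a_i's."""
    a_to_b = [k_nearest(p, batch_b, k) for p in batch_a]
    b_to_a = [k_nearest(q, batch_a, k) for q in batch_b]
    return [
        (i, j)
        for i, neighbors in enumerate(a_to_b)
        for j in sorted(neighbors)
        if i in b_to_a[j]
    ]


# Batch A contains a population at (8, 8) that is absent from batch B.
batch_a = [(0.0, 0.0), (1.0, 0.0), (8.0, 8.0)]
# Batch B holds the shared population, displaced by a technical offset.
batch_b = [(0.3, 0.2), (1.3, 0.2)]

pairs = mutual_nearest_neighbors(batch_a, batch_b, k=1)

# Average displacement across MNN pairs serves as a single batch vector.
batch_vector = [
    mean(batch_b[j][d] - batch_a[i][d] for i, j in pairs) for d in range(2)
]
corrected_b = [
    tuple(coord - shift for coord, shift in zip(cell, batch_vector))
    for cell in batch_b
]
print(pairs, batch_vector, corrected_b)
```

The batch-specific population at (8, 8) forms no mutual pair, so it does not contribute to the estimated batch vector.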

I am working with a confounded study design where my biological groups are processed in completely separate batches. Is correction even possible?

This is one of the most difficult scenarios, as biological and technical effects are perfectly correlated. Most standard methods will fail, as they cannot distinguish biology from batch.

- The Challenge: In a confounded design (e.g., all control samples in Batch 1 and all treatment samples in Batch 2), any attempt to remove the "batch effect" will also remove the biological differences you are trying to study. [2]

- Advanced Solution: Ratio-Based Scaling with Reference Materials. The most robust solution is to use a strategy that relies on external controls. If you profile a common reference material (e.g., a standardized control sample or pooled sample) in every batch, you can transform your data into ratios relative to that reference. [28] [2]

- Protocol: For each gene in each sample, calculate Ratio = Expression_in_Study_Sample / Expression_in_Reference_Material. This scales all batches to a common baseline, effectively removing the batch-specific technical variation and revealing the true biological differences between groups, even in confounded designs. [2]

- Application Note: This ratio-based method (Ratio-G) has been shown to be "much more effective and broadly applicable than others" in confounded scenarios for multi-omics data, including transcriptomics and proteomics. [2]

After using Harmony, I no longer have a full gene expression matrix for differential expression. What went wrong?

This is not an error but a fundamental characteristic of how some modern batch-correction methods operate.

- Understanding Output Types: Methods like Harmony and fastMNN perform correction in a low-dimensional space (e.g., after PCA). Their output is an integrated embedding, not a corrected count matrix for all genes. This embedding is excellent for visualization, clustering, and cell type annotation but cannot be used directly for standard differential expression tests on genes. [27] [26]

- Alternative Workflows:

- For DE analysis after Harmony integration: First, use the corrected embedding to identify cell populations or clusters. Then, perform differential expression testing using the original, uncorrected counts, but use the cell clusters or states identified from the integrated data as the biological variable of interest.

- Choose a method that returns a matrix: If your workflow requires a corrected gene expression matrix, select a method that provides one, such as ComBat, MNN Correct, limma's removeBatchEffect, or Scanorama. [27] [26]

Essential Research Reagent Solutions

The following reagents and computational resources are critical for implementing the protocols discussed above.

| Reagent / Resource | Function in Batch-Effect Correction | Example Use Case |

|---|---|---|

| Reference Materials | Provides a technical baseline for ratio-based correction methods. Enables correction in confounded study designs. [2] | Quartet Project reference materials (D5, D6, F7, M8) for multi-omics data. [28] [2] |

| Housekeeping Gene Panel | Serves as biologically stable reference genes for evaluating overcorrection (e.g., in the RBET framework). [27] | Pancreas-specific housekeeping genes for validating batch correction in pancreas cell data. [27] |

| Precision Biological Samples | Technical replicates across batches to assess correction performance via metrics like CV or SNR. [28] [2] | Triplicates of donor samples within each batch in the Quartet datasets. [2] |

Performance Evaluation Protocol

To quantitatively evaluate the success of any batch-effect correction method in your embryo study, implement the following protocol using a combination of metrics.

Step 1: Assess Batch Mixing

- Metric: Local Inverse Simpson's Index (LISI). LISI measures the diversity of batches in the local neighborhood of each cell. A higher LISI score indicates better mixing of batches. [27] [26]

- Metric: k-nearest neighbor Batch Effect Test (kBET). kBET tests if the local batch distribution around each cell matches the global distribution. A lower rejection rate indicates successful local mixing. [27] [26]
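To make the LISI idea concrete, the sketch below computes an inverse Simpson's index over each cell's `k` nearest neighbors. This is a simplified stand-in: published LISI implementations weight neighbors with a distance-based kernel rather than using a fixed, unweighted neighborhood, and the function names and coordinates here are assumptions.

```python
import math
from collections import Counter
from statistics import mean


def inverse_simpson(labels):
    """1 / sum(p_b^2), where p_b is the fraction of neighbors from batch b."""
    counts = Counter(labels)
    total = len(labels)
    return 1.0 / sum((count / total) ** 2 for count in counts.values())


def simple_lisi(points, labels, k):
    """Mean inverse Simpson's index over each cell's k nearest neighbors."""
    scores = []
    for i, point in enumerate(points):
        others = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: math.dist(point, points[j]),
        )
        scores.append(inverse_simpson([labels[j] for j in others[:k]]))
    return mean(scores)


# Hypothetical embedding: two cell-type clusters of four cells each.
points = [
    (0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
    (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0),
]
mixed_batches = ["s1", "s2", "s1", "s2", "s1", "s2", "s1", "s2"]
separated_batches = ["s1", "s1", "s1", "s1", "s2", "s2", "s2", "s2"]

lisi_mixed = simple_lisi(points, mixed_batches, k=3)
lisi_separated = simple_lisi(points, separated_batches, k=3)
print(lisi_mixed, lisi_separated)
```

With two batches the score ranges from 1 (neighborhoods drawn from a single batch) to 2 (both batches equally represented).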

Step 2: Assess Biological Signal Preservation

- Metric: Adjusted Rand Index (ARI). ARI compares the cell clustering results before and after integration. A high ARI indicates that the biological cell type identities have been preserved. [4] [26]

- Metric: Average Silhouette Width (ASW). ASW evaluates the compactness and separation of cell type clusters. A high ASW indicates that cell types remain well-defined after correction. [4] [26]

- Metric: Reference-informed Batch Effect Test (RBET). This newer metric uses reference genes to evaluate correction quality and is uniquely sensitive to overcorrection, making it highly valuable. [27]
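The ARI comparison of clusterings can be written compactly from its pair-counting definition. The following is a minimal sketch with hypothetical cluster labels; `sklearn.metrics.adjusted_rand_score` provides the same quantity in practice.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """ARI from the contingency table of two labelings of the same cells."""
    n = len(labels_a)
    contingency = Counter(zip(labels_a, labels_b))
    sum_cells = sum(comb(count, 2) for count in contingency.values())
    sum_rows = sum(comb(count, 2) for count in Counter(labels_a).values())
    sum_cols = sum(comb(count, 2) for count in Counter(labels_b).values())
    expected = sum_rows * sum_cols / comb(n, 2)
    max_index = (sum_rows + sum_cols) / 2
    if max_index == expected:
        return 1.0  # degenerate case: both labelings are trivial
    return (sum_cells - expected) / (max_index - expected)


# Hypothetical cluster assignments for six cells.
before = [0, 0, 0, 1, 1, 1]
after_same = ["x", "x", "x", "y", "y", "y"]    # same partition, renamed labels
after_merged = ["x", "x", "x", "x", "x", "x"]  # clusters collapsed into one

ari_same = adjusted_rand_index(before, after_same)
ari_merged = adjusted_rand_index(before, after_merged)
print(ari_same, ari_merged)
```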

The table below summarizes the ideal outcomes for a successful correction.

| Evaluation Aspect | Key Metric | Target Outcome |

|---|---|---|

| Batch Mixing | LISI [26] | High Score |

| Batch Mixing | kBET rejection rate [26] | Low Score |

| Biology Preservation | ARI [4] | High Score |

| Biology Preservation | ASW (cell type) [4] | High Score |

| Overcorrection Awareness | RBET [27] | Low value (the value rises again under overcorrection) |

Correction Evaluation Workflow

Troubleshooting Guides and FAQs

Frequently Asked Questions

Q1: What are the primary technical challenges when integrating incomplete omic data from multiple research sites? Integrating incomplete omic data from multiple sites presents two core challenges: batch effects (technical variations from different labs, protocols, or instruments that can confound biological signals) and data incompleteness (missing values common in high-throughput omic technologies). These issues are particularly pronounced in multi-site studies where biological and technical factors are often confounded, making it difficult to distinguish true biological signals from technical artifacts [22] [16] [6].

Q2: My data has different covariates distributed unevenly across batches. Can BERT handle this? Yes. BERT allows specification of categorical covariates (e.g., biological conditions) and can model these conditions using modified design matrices in its underlying algorithms (ComBat and limma). This preserves covariate effects while removing batch effects, which is crucial for severely imbalanced or sparsely distributed conditions [22].

Q3: How does BERT's performance compare to HarmonizR when dealing with large datasets? BERT demonstrates significant performance advantages over HarmonizR. In simulation studies with up to 50% missing values, BERT retained all numeric values, while HarmonizR's "unique removal" strategy led to substantial data loss (up to 88% for blocking of 4 batches). BERT also showed up to 11× runtime improvement by leveraging multi-core and distributed-memory systems [22].

Q4: What should I do when my phenotype of interest is completely confounded with batch? In fully confounded scenarios where biological groups separate completely by batch, standard correction methods may fail. The most effective approach is using a ratio-based method with reference materials. By scaling feature values of study samples relative to concurrently profiled reference materials in each batch, you can effectively distinguish biological from technical variations [6].

Q5: Are there scenarios where batch effect correction should not be applied? Yes, caution is needed when batch effects are minimal or when over-correction might remove biological signals. Always assess batch effect severity using metrics like Average Silhouette Width (ASW) before correction. Visualization techniques (PCA, t-SNE) should show batch mixing improvement while preserving biological group separation after correction [13].

Common Experimental Issues and Solutions

Problem: High data loss after running HarmonizR with default settings.

- Cause: HarmonizR's default "unique removal" strategy introduces additional data loss by removing features with insufficient values across batches [22].

- Solution: Consider using BERT instead, which retains all numeric values by propagating features with values from only one batch to the next correction level. If using HarmonizR, explore different blocking strategies, though this may still result in significant data loss [22].

Problem: Batch correction removes my biological signal of interest.

- Cause: This typically occurs in confounded designs where biological groups correlate perfectly with batches. Most algorithms cannot distinguish biological from technical variation in this scenario [6] [13].

- Solution: Implement a reference-based design using ratio scaling (Ratio-G). Profile reference materials alongside study samples in each batch, then transform expression data relative to reference values. This preserves biological differences while removing technical variations [6].

Problem: Unexpected clustering by processing date rather than biological group.

- Cause: Batch effects from temporal variations (different sequencing runs, reagent lots, or operators) are common, even within the same laboratory [16] [13].

- Solution: Include processing date as a batch variable in correction algorithms. For future experiments, balance biological groups across processing dates and use reference materials for longitudinal quality control [16].

Problem: Algorithm fails with "insufficient replicates" error.

- Cause: ComBat and limma (used by both BERT and HarmonizR) require at least two numerical values per feature per batch [22].

- Solution: BERT automatically handles this by removing singular numerical values (typically <1% of values) and propagating single-batch features. Ensure your data meets minimum requirements: each feature should have sufficient representation in at least some batches [22].

Performance Comparison: BERT vs. HarmonizR

Table 1: Quantitative comparison of BERT and HarmonizR performance characteristics

| Performance Metric | BERT | HarmonizR (Full Dissection) | HarmonizR (Blocking of 4) |

|---|---|---|---|

| Data Retention | Retains all numeric values | Up to 27% data loss with 50% missing values | Up to 88% data loss with 50% missing values |

| Runtime Improvement | Up to 11× faster (vs. HarmonizR) | Baseline | Varies by blocking strategy |

| Covariate Handling | Supports categorical covariates and reference samples | Limited capabilities | Limited capabilities |

| ASW Improvement | Up to 2× improvement for imbalanced conditions | Standard performance | Standard performance |

| Parallelization | Multi-core and distributed-memory systems | Embarrassingly parallel sub-matrices | Block-based parallelization |

Table 2: Algorithm suitability for different experimental scenarios

| Experimental Scenario | Recommended Tool | Key Considerations |

|---|---|---|

| Highly incomplete data (>30% missing values) | BERT | Superior data retention; preserves more features for analysis |

| Balanced batch-group design | Either tool | Both perform well when biological groups evenly distributed across batches |

| Confounded batch-group design | BERT with reference samples | Use covariate handling; ratio-based scaling recommended |

| Large-scale datasets (>1000 samples) | BERT | Better scalability and parallelization capabilities |

| Limited computational resources | HarmonizR with blocking | Reduced memory footprint with batch grouping |

| Unknown covariate levels | BERT with reference designation | Can estimate effects from references, apply to non-references |

Experimental Protocols

Protocol 1: Implementing BERT for Multi-Site Embryo Omic Data

Principle: BERT decomposes data integration into a binary tree of batch-effect correction steps, using ComBat or limma for features with sufficient data while propagating single-batch features [22].

Step-by-Step Procedure:

- Input Preparation: Format data as a data.frame or SummarizedExperiment object. Ensure samples are annotated with batch information and biological covariates [22].

- Parameter Configuration: Set parallelization parameters (P = number of processes, R = reduction factor, S = sequential processing threshold). Default values typically suffice for initial runs [22].

- Reference Specification: Designate samples with known covariates as references. BERT will use these to estimate batch effects while preserving biological signals [22].

- Algorithm Execution: Run BERT using the established Bioconductor implementation. Monitor progress through quality control outputs [22].

- Output Validation: Verify integration using Average Silhouette Width (ASW) scores. Compare pre- and post-integration values for both batch and biological labels [22].

Protocol 2: Reference-Based Ratio Scaling for Confounded Designs

Principle: Transform absolute feature values to ratios relative to concurrently profiled reference materials, effectively separating biological from technical variations [6].

Step-by-Step Procedure:

- Reference Selection: Choose appropriate reference materials that represent the biological system under study. For embryo research, this might include pooled samples or well-characterized reference cell lines [6].

- Concurrent Profiling: Process reference materials alongside study samples in every batch, maintaining consistent processing protocols across sites [6].

- Ratio Calculation: For each feature in every sample, calculate ratio = sample_value / reference_value. Use median scaling when multiple reference replicates are available [6].

- Data Integration: Proceed with integrated analysis using ratio-scaled data. Biological signals will be preserved while batch-specific technical variations are minimized [6].
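The ratio calculation in this protocol can be sketched as follows. The example assumes that "median scaling" means taking the per-feature median of the reference replicates within a batch as the denominator; the function name, feature names, and values are hypothetical, and features with a zero reference value would need separate handling.

```python
from statistics import median


def ratio_scale(study_samples, reference_replicates):
    """Divide each feature by the median of that batch's reference replicates."""
    features = list(reference_replicates[0])
    reference = {
        feature: median(replicate[feature] for replicate in reference_replicates)
        for feature in features
    }
    return [
        {feature: sample[feature] / reference[feature] for feature in features}
        for sample in study_samples
    ]


# Batch 2 measures everything at twice the intensity of batch 1 (technical effect).
batch1_samples = [{"gene1": 10.0, "gene2": 4.0}]
batch1_reference = [
    {"gene1": 5.0, "gene2": 2.0},
    {"gene1": 5.5, "gene2": 2.2},
    {"gene1": 4.5, "gene2": 1.8},
]
batch2_samples = [{"gene1": 20.0, "gene2": 8.0}]
batch2_reference = [
    {"gene1": 10.0, "gene2": 4.0},
    {"gene1": 11.0, "gene2": 4.4},
    {"gene1": 9.0, "gene2": 3.6},
]

scaled_batch1 = ratio_scale(batch1_samples, batch1_reference)
scaled_batch2 = ratio_scale(batch2_samples, batch2_reference)
print(scaled_batch1, scaled_batch2)
```

Because the reference material carries the same batch-specific scaling as the study samples, the ratios from the two batches coincide in this toy case.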

Research Reagent Solutions

Table 3: Essential materials for robust multi-omics batch effect correction

| Reagent/Material | Function in Batch Correction | Implementation Considerations |

|---|---|---|

| Reference Materials | Enables ratio-based scaling; monitors technical variation | Select materials biologically relevant to study system; ensure long-term availability |

| Quality Control Metrics | Quantifies batch effect severity and correction success | Implement ASW, PCA visualization, and signal-to-noise ratios |

| Covariate Annotation | Preserves biological effects during technical correction | Comprehensive sample metadata collection; standardized formatting |

| Multiomics Standards | Facilitates integration across different data types | Use consortium-developed standards (Quartet Project materials) |

| Computational Resources | Enables processing of large-scale datasets | High-performance computing environment; adequate memory allocation |

Validation and Quality Control

Pre- and Post-Correction Assessment

Visualization: Generate PCA and t-SNE plots colored by both batch and biological groups before and after correction. Successful correction shows batches mixing while biological groups remain distinct [13].

Quantitative Metrics:

- Average Silhouette Width (ASW): Measures separation between batches (ASW_batch) and biological groups (ASW_label). Effective correction decreases ASW_batch while maintaining or increasing ASW_label [22].

- Signal-to-Noise Ratio (SNR): Quantifies biological signal preservation after correction [6].

- Differential Expression Analysis: Compare results before and after correction; credible correction should yield biologically plausible findings [6].
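To make the ASW criterion concrete, the sketch below computes the average silhouette width twice on the same embedding, once with batch labels and once with biological labels. It uses plain NumPy with Euclidean distances; the function name, the toy embedding, and the labels (`site1`, `site2`, `epiblast`, `endoderm`) are illustrative assumptions, and the "after" data simply simulate a correction that removes the site shift.

```python
import numpy as np

def average_silhouette_width(X, labels):
    """Mean silhouette width of the points in X for the given grouping."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    groups = np.unique(labels)
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    # Full pairwise Euclidean distance matrix (fine for small examples).
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    widths = np.zeros(len(labels))
    for i in range(len(labels)):
        same = labels == labels[i]
        n_same = same.sum()
        if n_same == 1:
            continue  # a singleton group gets silhouette 0 by convention
        # a: mean distance to the other members of the point's own group
        a = dist[i, same].sum() / (n_same - 1)
        # b: mean distance to the nearest other group
        b = min(dist[i, labels == g].mean() for g in groups if g != labels[i])
        denom = max(a, b)
        widths[i] = 0.0 if denom == 0 else (b - a) / denom
    return widths.mean()

# Toy embedding: axis 0 carries biology, axis 1 carries a site shift.
rng = np.random.default_rng(0)
cell_type = np.repeat(["epiblast", "endoderm"], 20)
batch = np.tile(["site1", "site2"], 20)
biology = np.where(cell_type == "epiblast", 0.0, 5.0)[:, None]
shift = np.where(batch == "site1", 0.0, 3.0)[:, None]
noise = rng.normal(scale=0.3, size=(40, 2))
before = biology * np.array([1.0, 0.0]) + shift * np.array([0.0, 1.0]) + noise
after = biology * np.array([1.0, 0.0]) + noise  # simulated corrected data

asw_batch_before = average_silhouette_width(before, batch)
asw_batch_after = average_silhouette_width(after, batch)
asw_label_before = average_silhouette_width(before, cell_type)
asw_label_after = average_silhouette_width(after, cell_type)
```

A correction that behaves as described above should move `asw_batch_after` towards zero or below while `asw_label_after` stays at or above its pre-correction value.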

Implementation in Multi-Site Embryo Studies

For embryo-specific research, consider these adaptations:

- Use embryo-stage-matched reference materials when possible

- Account for developmental timing as a critical covariate

- Implement cross-site standardization of embryo processing protocols

- Establish consensus on minimal quality thresholds for embryo quality metrics
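One way to treat developmental timing as a covariate is to fit it jointly with the site term and subtract only the site component. The sketch below uses an ordinary least-squares model per gene; it is a simplified stand-in for covariate-aware methods in general, not a specific published algorithm, and the function name and toy values are assumptions. It only works when stage and site are not fully confounded, that is, when each site contributes embryos from more than one stage.

```python
import numpy as np

def remove_batch_keep_covariate(expr, batch, stage):
    """Subtract fitted batch shifts while leaving the stage effect in place.

    expr:  (n_samples, n_genes) log-scale expression
    batch: length-n_samples site or batch labels
    stage: length-n_samples developmental stage (numeric, e.g. embryonic day)
    """
    expr = np.asarray(expr, dtype=float)
    batch = np.asarray(batch)
    stage = np.asarray(stage, dtype=float)
    levels = np.unique(batch)
    # Indicator columns for every batch except the first (the baseline).
    batch_design = (batch[:, None] == levels[None, 1:]).astype(float)
    design = np.column_stack([np.ones(len(stage)), stage, batch_design])
    # One least-squares fit per gene (each column of expr is one gene).
    coef, *_ = np.linalg.lstsq(design, expr, rcond=None)
    batch_coef = coef[2:]  # rows 0 and 1 are the intercept and the stage slope
    return expr - batch_design @ batch_coef

# Toy data: two sites, each sampling the same three stages.
stage = np.array([7.5, 8.5, 9.5, 7.5, 8.5, 9.5])
batch = np.array(["siteA", "siteA", "siteA", "siteB", "siteB", "siteB"])
site_b = (batch == "siteB").astype(float)
expr = np.column_stack([
    2.0 * stage + 3.0 * site_b,         # gene rising with stage, +3 at site B
    10.0 - 1.0 * stage - 1.5 * site_b,  # gene falling with stage, -1.5 at site B
])
corrected = remove_batch_keep_covariate(expr, batch, stage)
```

Leaving `stage` out of the design would force the model to explain stage differences with the batch term whenever sites sample stages unevenly, which is exactly the loss of biological signal the covariate is meant to prevent.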

By implementing these troubleshooting guides, experimental protocols, and validation procedures, researchers can effectively address data incompleteness and batch effects in multi-site embryo omic studies, ensuring robust and reproducible integration of incomplete omic profiles.

In multi-site embryo studies, integrating single-cell RNA sequencing (scRNA-seq) data from different batches or laboratories is a fundamental challenge. Batch effects—systematic technical variations—can obscure true biological signals, complicating the analysis of complex processes like embryonic development. Order-preserving batch-effect correction is a methodological advancement that maintains the original relative rankings of gene expression levels within each batch after integration. This feature is crucial for preserving biologically meaningful patterns, such as gene regulatory relationships and differential expression signals. Monotonic Deep Learning Networks, which enforce constrained input-output relationships, have emerged as a powerful tool to achieve this correction while ensuring model interpretability. This technical support article provides troubleshooting guides and FAQs to help researchers successfully implement these methods in their experiments.

FAQs & Troubleshooting Guides

Theory and Conceptual Understanding

Q1: What does "order-preserving" mean in the context of batch-effect correction, and why is it important for my embryo studies?

A: Order-preserving correction keeps the relative ranking of gene expression levels within each cell unchanged after batch effects are removed [4]. In technical terms, if gene X has a higher expression level than gene Y in a specific cell before correction, X remains higher than Y in that cell after correction.

- Why it matters: This property is vital for downstream biological analysis. Disrupting the original order of gene expression can:

- Skew Gene-Gene Correlations: Artificially alter the relationships between genes, leading to incorrect inferences about gene regulatory networks [4].

- Compromise Differential Expression: Obscure true differentially expressed genes between different embryonic stages or tissue regions [4].

- Reduce Interpretability: Make it difficult to relate the corrected data back to the original biological question.
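The definition in the answer to Q1 translates directly into a check that can be run on any corrected matrix. The sketch below is illustrative only: the function name and toy values are assumptions, and it counts a gene pair as violated only when its order is reversed, so ties introduced by the correction are tolerated.

```python
import numpy as np

def order_preserved(original, corrected):
    """Per cell, report whether any gene pair reverses its order.

    Both inputs are (n_cells, n_genes). Pairwise comparison is quadratic in
    the number of genes, so this is meant for spot checks on gene subsets.
    """
    original = np.asarray(original, dtype=float)
    corrected = np.asarray(corrected, dtype=float)
    preserved = np.empty(original.shape[0], dtype=bool)
    for i, (before, after) in enumerate(zip(original, corrected)):
        # Sign of every pairwise difference: +1, 0 or -1.
        sign_before = np.sign(before[:, None] - before[None, :])
        sign_after = np.sign(after[:, None] - after[None, :])
        # A negative product means the pair switched order.
        preserved[i] = not np.any(sign_before * sign_after < 0)
    return preserved

original = np.array([[1.0, 5.0, 3.0],
                     [0.0, 2.0, 4.0]])
corrected = np.array([np.log1p(original[0]),  # monotone transform keeps the order
                      [0.5, 3.0, 2.0]])       # genes 1 and 2 swap in this cell
flags = order_preserved(original, corrected)
```

Here the first cell passes and the second is flagged, because its second and third genes exchange ranks after the simulated correction.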

Q2: How do Monotonic Deep Learning Networks enforce order-preservation?

A: A Monotonic Deep Learning Network is a structurally constrained neural network. It contains specialized layers or modules (e.g., an Isotonic Embedding Module) that ensure the network's output is a monotonic function of its input for specified features [29] [30]. This means that as the input value for a particular gene increases, the network's corrected output for that gene never decreases: it either increases or stays the same. Because larger inputs can never map to smaller outputs, the original expression order is preserved.
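A common way to obtain this guarantee structurally is to force every weight on the path from input to output to be positive and to use increasing activation functions. The sketch below shows that generic construction with an untrained two-layer network in NumPy. It is not the DIEN or MonoNet architecture; the class name, layer sizes, and softplus reparameterisation are assumptions chosen for illustration.

```python
import numpy as np

def softplus(x):
    # Numerically stable log(1 + exp(x)); the result is always positive.
    return np.logaddexp(0.0, x)

class TinyMonotonicNet:
    """Two-layer network from one input to one output, non-decreasing in its input."""

    def __init__(self, hidden=8, seed=0):
        rng = np.random.default_rng(seed)
        # Unconstrained parameters; positivity is imposed in the forward pass.
        self.raw_w1 = rng.normal(size=(1, hidden))
        self.b1 = rng.normal(size=hidden)
        self.raw_w2 = rng.normal(size=(hidden, 1))
        self.b2 = rng.normal(size=1)

    def __call__(self, x):
        x = np.asarray(x, dtype=float).reshape(-1, 1)
        w1 = softplus(self.raw_w1)  # positive weights
        w2 = softplus(self.raw_w2)  # positive weights
        hidden = np.tanh(x @ w1 + self.b1)  # tanh is an increasing function
        return (hidden @ w2 + self.b2).ravel()

net = TinyMonotonicNet()
expression = np.linspace(0.0, 10.0, 50)  # increasing input values
corrected = net(expression)
```

The weights here are random, so the output values mean nothing biologically. The point is that the ordering of `corrected` follows the ordering of `expression` for any parameter values, so training can change the shape of the mapping but not its direction.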

Experimental Design and Setup

Q3: I am designing a multi-site embryo study. What preliminary steps can I take to facilitate effective order-preserving correction later?

A: Proactive experimental design is key.

- Metadata Collection: Meticulously record all batch-related metadata (e.g., sequencing platform, laboratory of origin, sample preparation date, technician). This information is essential for the correction model.

- Include Biological Controls: If possible, include replicate samples or control cell lines across different batches. This provides a biological ground truth to validate the correction method's performance.

- Plan for Integration: Choose a monotonic deep learning method, like a global monotonic model, that is designed for multi-batch integration from the start, rather than relying on pairwise methods that can be sensitive to the order of batch processing [4] [31].

Q4: Which specific monotonic models are available for batch-effect correction?

A: Research in this area is evolving. The table below summarizes key model types based on current literature:

| Model Type / Concept | Key Mechanism | Reference in Literature |
|---|---|---|
| Global Monotonic Model | Ensures order-preservation for all genes without additional conditions. | [4] |
| Partial Monotonic Model | Ensures order-preservation based on the same initial condition or matrix. | [4] |
| Deep Isotonic Embedding Network (DIEN) | Uses separate modules for monotonic and non-monotonic features, combining them linearly for an intuitive structure. | [29] |
| MonoNet | Employs monotonically connected layers to ensure monotonic relationships between high-level features and outputs. | [30] |

Implementation and Technical Troubleshooting

Q5: I'm getting poor clustering results after applying a monotonic correction model. What could be wrong?

A: Poor integration can stem from several issues. Use the following troubleshooting table to diagnose the problem.

| Symptom | Potential Cause | Solution |
|---|---|---|
| Low clustering accuracy (Low ARI) and distinct batch clusters. | The model is failing to mix cells from different batches. | Verify that technical differences are smaller than true biological variations (e.g., between cell types), as this is a key assumption for many methods [31]. |
| Loss of rare cell populations. | The correction method is over-smoothing the data. | Ensure the method's loss function or architecture is designed to preserve biological heterogeneity. Some methods integrate clustering with correction to protect rare cell types [31] [32]. |
| Poor preservation of inter-gene correlation. | The correction method is disrupting gene-gene relationships. | Switch to or validate with a method specifically designed to preserve inter-gene correlation, which is a strength of order-preserving approaches [4]. |

Q6: How do I quantitatively evaluate if my order-preserving correction was successful?

A: You should use a combination of metrics that assess both batch mixing and biological fidelity. The table below outlines the key metrics.

| Evaluation Goal | Metric | What it Measures | Desired Outcome |
|---|---|---|---|
| Batch Mixing | Local Inverse Simpson's Index (LISI) [4] | Diversity of batches in local cell neighborhoods. | Higher LISI score indicates better mixing. |
| Clustering Accuracy | Adjusted Rand Index (ARI) [4] [31] | Similarity between clustering results and known cell type labels. | Higher ARI indicates clusters align better with true biology. |
| Cluster Compactness | Average Silhouette Width (ASW) [4] | How similar a cell is to its own cluster compared to other clusters. | Higher ASW indicates tighter, more distinct clusters. |
| Order-Preservation | Spearman Correlation [4] | Preservation of gene expression rankings before and after correction. | Correlation close to 1 indicates perfect order preservation. |
| Inter-Gene Correlation | Root Mean Square Error (RMSE) / Pearson Correlation [4] | Preservation of correlation structures between gene pairs. | Low RMSE and High Pearson correlation indicate success. |
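The batch-mixing row can be illustrated with a simplified LISI. The published metric weights neighbours with a perplexity-based kernel; the sketch below instead takes the inverse Simpson's index over an unweighted set of k nearest neighbours, which is an assumption made to keep the example short. The function name `knn_lisi` and the toy embeddings are likewise hypothetical.

```python
import numpy as np

def knn_lisi(embedding, batch, k=10):
    """Inverse Simpson's index of batch labels among each cell's k nearest neighbours.

    Returns one score per cell, ranging from 1 (all neighbours come from one
    batch) up to the number of batches (all batches equally represented).
    """
    embedding = np.asarray(embedding, dtype=float)
    batch = np.asarray(batch)
    diff = embedding[:, None, :] - embedding[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)  # a cell is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]
    levels = np.unique(batch)
    scores = np.empty(len(batch))
    for i, idx in enumerate(neighbours):
        # Fraction of the k neighbours that belong to each batch.
        proportions = np.array([(batch[idx] == b).mean() for b in levels])
        scores[i] = 1.0 / np.sum(proportions ** 2)
    return scores

rng = np.random.default_rng(0)
batch = np.tile(["site1", "site2"], 30)
mixed = rng.normal(size=(60, 2))  # both sites drawn from one distribution
separated = mixed + np.where(batch == "site2", 50.0, 0.0)[:, None]

lisi_mixed = knn_lisi(mixed, batch)
lisi_separated = knn_lisi(separated, batch)
```

With two batches, scores near 2 indicate well-mixed neighbourhoods and scores near 1 indicate that cells still sit among cells from their own site.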

Data Interpretation and Biological Validation

Q7: The corrected data looks well-mixed, but my differential expression analysis yields unexpected results. What should I check?

A: This can indicate that batch effects were removed at the cost of true biological signal.

- Validate with Ground Truth: Check the expression of known marker genes for key cell types in your embryo data (e.g., markers for neural tube, somites). They should still be differentially expressed in the appropriate clusters after correction.

- Leverage Order-Preservation: Use the order-preserving property of your model to your advantage. Since the relative expression levels are maintained, you can have higher confidence that drastic changes in differential expression are due to the removal of confounding technical noise rather than an artifact of the correction process [4].

- Inspect Latent Space: Use visualization tools like UMAP or t-SNE to see if the cell types are separating based on biology rather than batch in the corrected low-dimensional embedding [32] [33].
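The marker-gene check in the first point can be reduced to comparing one number before and after correction. The sketch below assumes log-scale expression, cluster labels that are already assigned, and a hypothetical marker in column 0; the function name and all values are invented for illustration.

```python
import numpy as np

def marker_log_fold_change(log_expr, cluster_labels, gene_index, target_cluster):
    """Mean log expression of one gene in the target cluster minus the mean elsewhere."""
    log_expr = np.asarray(log_expr, dtype=float)
    in_cluster = np.asarray(cluster_labels) == target_cluster
    inside = log_expr[in_cluster, gene_index].mean()
    outside = log_expr[~in_cluster, gene_index].mean()
    return inside - outside

clusters = np.array(["neural_tube"] * 3 + ["somite"] * 3)

# Column 0 is a hypothetical neural tube marker (values are made up).
before = np.array([[3.0], [3.2], [2.8], [0.5], [0.4], [0.6]])
good_correction = np.array([[2.9], [3.0], [2.8], [0.6], [0.5], [0.7]])
over_correction = np.array([[1.6], [1.5], [1.7], [1.5], [1.6], [1.4]])

lfc_before = marker_log_fold_change(before, clusters, 0, "neural_tube")
lfc_good = marker_log_fold_change(good_correction, clusters, 0, "neural_tube")
lfc_over = marker_log_fold_change(over_correction, clusters, 0, "neural_tube")

# Fraction of the original marker signal that survives each correction.
retained_good = lfc_good / lfc_before
retained_over = lfc_over / lfc_before
```

A marker whose retained fraction collapses towards zero, as in the over-corrected example, suggests that biological signal was removed along with the batch effect.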

Essential Experimental Protocols

Protocol 1: Benchmarking an Order-Preserving Correction Method

This protocol outlines steps to evaluate a new monotonic deep learning model for batch-effect correction, using established metrics.

1. Data Preprocessing:

- Input: Raw gene expression matrices from multiple batches (e.g., different embryo studies).

- Filtering: Remove low-quality cells and genes. Normalize for sequencing depth.

- Feature Selection: Identify Highly Variable Genes (HVGs) to reduce dimensionality and computational load [32].

2. Model Application:

- Setup: Configure the monotonic deep learning model (e.g., global or partial), specifying which features (genes) are subject to the monotonic constraint.

- Training: Train the model on the integrated multi-batch dataset. The model will learn to map the data to a corrected space while preserving expression orders.

3. Performance Evaluation:

- Generate Corrected Output: Obtain the batch-corrected gene expression matrix from the model.

- Calculate Metrics:

- Compute LISI and ARI on the corrected data to assess batch mixing and clustering accuracy.

- For a subset of cells, calculate the Spearman correlation between the original (non-zero) expression values and the corrected values for each gene to confirm order-preservation [4].

- Calculate the Pearson correlation for known correlated gene pairs before and after correction to assess preservation of biological relationships [4].
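The last two metric steps of Protocol 1 can be sketched without external statistics packages. The code below computes a per-gene Spearman correlation restricted to cells with non-zero original expression, and summarises inter-gene correlation preservation as the RMSE between the Pearson correlation matrices before and after correction. Function names, the minimum of three expressed cells, and the simulated data are assumptions. The "corrected" matrix is just a monotone transform standing in for model output.

```python
import numpy as np

def average_ranks(x):
    """Ranks starting at 1, with tied values sharing their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x))
    ranks[order] = np.arange(1, len(x) + 1)
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def per_gene_spearman(original, corrected, min_cells=3):
    """Spearman correlation per gene over cells with non-zero original expression."""
    original = np.asarray(original, dtype=float)
    corrected = np.asarray(corrected, dtype=float)
    scores = np.full(original.shape[1], np.nan)
    for g in range(original.shape[1]):
        expressed = original[:, g] > 0
        if expressed.sum() >= min_cells:
            r_before = average_ranks(original[expressed, g])
            r_after = average_ranks(corrected[expressed, g])
            scores[g] = np.corrcoef(r_before, r_after)[0, 1]
    return scores

def intergene_correlation_rmse(original, corrected):
    """RMSE between gene-gene Pearson correlations before and after correction."""
    corr_before = np.corrcoef(original, rowvar=False)
    corr_after = np.corrcoef(corrected, rowvar=False)
    upper = np.triu_indices_from(corr_before, k=1)  # each gene pair once
    return np.sqrt(np.mean((corr_before[upper] - corr_after[upper]) ** 2))

rng = np.random.default_rng(0)
original = rng.poisson(5, size=(50, 4)).astype(float)  # cells x genes
corrected = 0.9 * np.log1p(original) + 0.1              # monotone stand-in
shuffled = corrected.copy()
shuffled[:, 0] = rng.permutation(corrected[:, 0])       # break gene 0's order

spearman_ok = per_gene_spearman(original, corrected)
spearman_bad = per_gene_spearman(original, shuffled)
rmse_ok = intergene_correlation_rmse(original, corrected)
```

Genes whose Spearman value falls clearly below 1, like the shuffled gene in `spearman_bad`, are the ones to inspect first when order preservation is in doubt.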

Protocol 2: Validating Cross-Platform Integration in Embryonic Mouse Data

This protocol describes a specific experiment to test a method's ability to integrate data from different spatial transcriptomics platforms.

1. Data Collection:

- Acquire spatially resolved transcriptomics (SRT) data of embryonic mouse tissues (e.g., at stage E11.5) from at least two different technology platforms (e.g., 10x Visium and MERFISH) [34].

2. Data Integration:

- Apply the order-preserving correction framework (e.g., a method like SpaCross [34]) to integrate the datasets. The method should perform 3D spatial registration to align coordinates and construct a unified graph.

3. Biological Validation:

- Identify Spatial Domains: Cluster the integrated data to identify anatomical structures (e.g., dorsal root ganglion, heart tube).

- Check for Conservation: Verify that known, conserved anatomical regions (e.g., neural tube) are correctly identified as single, coherent domains in the integrated data.

- Check for Specificity: Confirm that stage-specific structures are also accurately captured, demonstrating that the method preserves biological variation while removing technical noise [34].

Method Workflow and Signaling Pathways

Order-Preserving Batch Correction Workflow

[Diagram: general workflow for applying a monotonic deep learning network to correct batch effects while preserving gene expression orders, as described in the protocols.]

Monotonic Neural Network Architecture

[Diagram: core architecture of a monotonic network (e.g., DIEN [29]), showing how it processes different types of features to ensure a monotonic output.]

The Scientist's Toolkit: Research Reagent Solutions

The following table lists key computational tools and resources essential for implementing order-preserving batch effect correction.

| Item / Resource | Function / Description | Relevance to Experiment |
|---|---|---|
| Monotonic DL Frameworks (e.g., code for DIEN [29], MonoNet [30]) | Pre-built neural network architectures with monotonicity constraints. | Provides the core engine for performing order-preserving corrections without building a model from scratch. |
| scRNA-seq Analysis Suites (e.g., Scanpy in Python, Seurat in R) | Comprehensive environments for single-cell data preprocessing, visualization, and analysis. | Used for initial data QC, normalization, HVG selection, and for running downstream analyses on the corrected data. |
| Evaluation Metrics Scripts (Custom or from publications) | Code to calculate ARI, LISI, ASW, and Spearman correlation. | Essential for quantitatively benchmarking the performance of the correction method against alternatives. |
| High-Performance Computing (HPC) / GPU Access | Access to powerful computational resources. | Training deep learning models on large-scale scRNA-seq data (e.g., millions of cells) is computationally intensive and often requires GPUs. |
| Public scRNA-seq Datasets (e.g., with known batch effects) | Benchmarking data from repositories like the Human Cell Atlas. | Used as positive controls to test and validate the correction method's performance on real-world, challenging data [31] [32]. |